Occasionally I will copy a Mercurial repository onto another computer using a USB key (e.g. to work from home, or when I am giving a presentation). What happens when the two computers get out of sync? How do I bring them back in line? As always with DVCS there are several ways to deal with it.

- If you know that changes have only been made in one repo, you could simply use the USB key again to copy the most up-to-date repo over the older one. This isn’t a recommended way to work, but in simple cases it does the trick.

- Slightly safer would be to copy the newer repo onto the old computer in a different folder, then set that folder up as a new remote location and pull from that. If changes were made on both computers, you’d need to do this both ways, which would be cumbersome.

- If you have a public server you can create repos on, then you can push to the server and pull from the other computer. Bitbucket is excellent for this, with its unlimited free private repos.

However, Mercurial also comes with a handy utility that allows you to sync repos between two computers without the need for a USB key or a central server. All you need is for them to be on the same network.

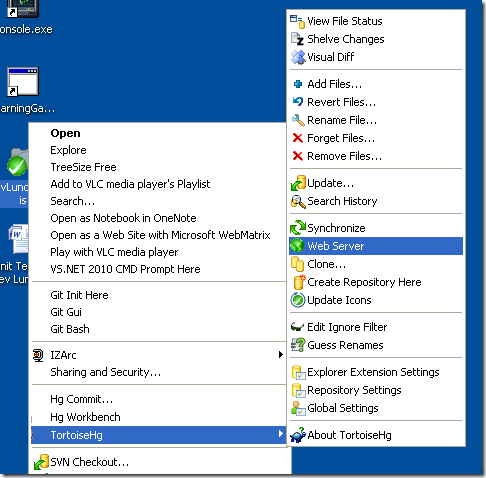

If you have TortoiseHg installed (which I highly recommend for Mercurial development), then you can simply right-click on any Mercurial repository and select TortoiseHg | Web Server.

This launches a very simple web server which runs the hg serve command underneath (e.g. hg serve --port 8000 --debug -R C:\Users\Mark\Code\MyApp).
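If you prefer the command line to the TortoiseHg menu, the same thing can be sketched as a pair of small shell functions. The function names are my own, and the address printed assumes that your machine's host name resolves on the local network, which may not be true everywhere (an IP address works too).

```shell
PORT=8000   # same port as in the example command above

# Print the address other machines on the network would use.
# Assumes the host name reported by uname -n resolves on your network.
repo_url() {
    printf 'http://%s:%s/\n' "$(uname -n)" "${1:-$PORT}"
}

# Serve the repository at $1 (optionally on port $2) until you press Ctrl+C.
serve_repo() {
    repo_url "${2:-$PORT}"
    hg serve --port "${2:-$PORT}" -R "$1"
}
```

Calling serve_repo with the path to your repo prints the address first, so you know what to type on the other computer.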

You can visit this web site in your browser and view the changesets or browse the code.

Now, on your other computer, all you need to do is open up the .hg\hgrc file in your repository in a text editor (you might need to create it), and set up a new path. For example:

    [paths]
    default = http://cpu-1927482d:8000/
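If you would rather not open an editor, the same two lines can be appended from the shell. This is only a sketch: set_default_path is a name I made up, and it appends blindly, so if your hgrc already has a [paths] section you should check the file by hand instead.

```shell
# Append a [paths] section that points "default" at the served repository.
# $1 = repository root (must already contain .hg), $2 = URL of the other machine.
set_default_path() {
    if [ ! -d "$1/.hg" ]; then
        echo "not a Mercurial repository: $1" >&2
        return 1
    fi
    # >> creates .hg/hgrc when it does not exist yet.
    printf '[paths]\ndefault = %s\n' "$2" >> "$1/.hg/hgrc"
}

# Hypothetical usage, run from inside the repository:
#   set_default_path . http://cpu-1927482d:8000/
```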

Now you can call hg pull -u to bring in all the changes from your other computer. It is a very simple and quick way to sync a repo between two development computers.

Another great use for this feature is for two developers on a team to share in-progress changes with one another that they are not yet ready to submit to the central repository.