I gave a seminar to the developers at my work this week on the subject of how we can keep the overall complexity of our projects down by refactoring methods. Here's some of my key points...

Measure the Complexity of Your Source Code

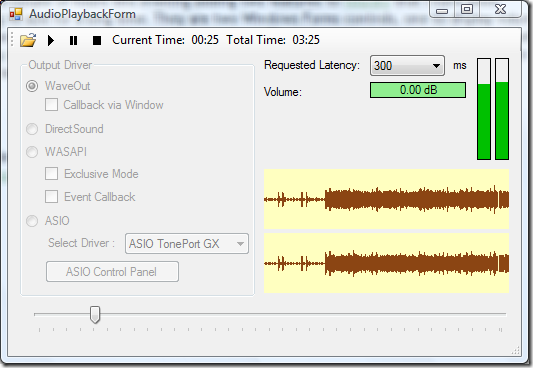

I have been experimenting recently with the excellent freeware application SourceMonitor. This can scan your source code folder (it supports several languages including C#, C++, VB.NET, Java), and give you a report that will help you quickly identify classes with the most methods, methods with the most lines of code, methods with the deepest level of indentation, and methods with the greatest "complexity" rating (which I think is related to how many possible routes through the code there are). You will be surprised at how good this simple tool is at identifying the areas of your code that have the most problems.

Measuring complexity allows you to identify whether new code you have written is over-complicated. It also alerts you to the fact that you may have increased the complexity of the existing code you have modified. If developers make it a goal to leave the code they work on less complex than it was when they started, gradually an overcomplicated codebase can be brought under control.

Complexity Grows Gradually

A competent developer writing new code knows not to make functions that are hugely long and convoluted. But complexity sneaks in gradually, most commonly because of bug fixes or special cases come up. Often these 5-10 line additions are considered so trivial that the method in question does not need to be refactored. But before long, you have gigantic methods that cause headaches for anyone who needs to debug, modify or reuse them.

Consider this simple line of code:

string filename = Path.Combine(folderBrowserDialog.SelectedPath,

String.Format("RecordedItem {0}-{1}-{2}-{3}-{4}-{5} Channel {6}.xml",

recordedItem.StartTime.Year,

recordedItem.StartTime.Month,

recordedItem.StartTime.Day,

recordedItem.StartTime.Hour,

recordedItem.StartTime.Minute,

recordedItem.StartTime.Second,

recordedItem.Channel));

All it is doing is creating a filename. Many developers would not consider putting this into a function of its own (even though it is taking up 9 lines of vertical space). What happens next is a bug report is filed, and suddenly, the code to create a filename gets even longer...

string filename = Path.Combine(folderBrowserDialog.SelectedPath,

String.Format("RecordedItem {0}-{1}-{2}-{3}-{4}-{5} Channel {6}.xml",

recordedItem.StartTime.Year,

recordedItem.StartTime.Month,

recordedItem.StartTime.Day,

recordedItem.StartTime.Hour,

recordedItem.StartTime.Minute,

recordedItem.StartTime.Second,

recordedItem.Channel));

int attempts = 0;

while (File.Exists(filename))

{

attempts++;

if (attempts > 20)

{

log.Error("Could not create a filename for {0}", recordedItem);

throw new InvalidOperationException("Error creating filename");

}

filename = Path.Combine(folderBrowserDialog.SelectedPath,

String.Format("RecordedItem {0}-{1}-{2}-{3}-{4}-{5} Channel {6} {7}.xml",

recordedItem.StartTime.Year,

recordedItem.StartTime.Month,

recordedItem.StartTime.Day,

recordedItem.StartTime.Hour,

recordedItem.StartTime.Minute,

recordedItem.StartTime.Second,

recordedItem.Channel,

attempts));

}

Now we have thirty lines of code all devoted to the relatively simple task of creating a unique filename. Most developers who will work on our code do not need or want to know the details. This code therefore is an ideal candidate for breaking out into a single method:

string filename = GetFilenameForRecordedItem(folderBrowserDialog.Path, recordedItem);

Now developers can skip over this code and only look within the function if they actually need to know how the filename is generated.

Break Long Methods into Individual Tasks

The most common reason for a method being very long, or deeply nested is simply that it is performing more than one task. If a method doesn't fit onto one screen in an IDE, the chances are it does too much.

Methods are not just for code that needs to be reused. It is fine to have private methods that only have one caller. If creating a method makes overall comprehension easier, then that is reason enough to create it.

Let's take another simple example. It is common to see functions that have a comment followed by several lines of code, and then another comment, and then more code, as follows:

private void buttonSearch_Click(object sender, EventArgs args)

{

// validate the user input

... several lines of code

// do the search

... several lines of code

}

When presented like this, it is pretty obvious that the search button click handler performs two discrete tasks, one after the other. The code can therefore be made much more simple to read if it is refactored into...

private void buttonSearch_Click(object sender, EventArgs e)

{

if (IsUserInputValid())

{

PerformSearch();

}

}

Now a developer can quickly see what is going on, and can easily choose which of the two functions to delve deeper into depending on what part of the functionality needs to be changed. It is also interesting to note that we don't need the comments any more. Comments are added when the code isn't self-explanatory. So clear code requires less comments.

Refactor Similar Code, Not Just Duplicated Code

Most developers know not to reuse by cutting and pasting large blocks of code. If exactly the same code is needed in two places, they will move it into a common function. But often the code isn't an exact duplicate, it is simply similar.

Consider this example

XmlNode childNode = xmlDoc.CreateElement("StartTime");

XmlText textNode = xmlDoc.CreateTextNode(recordedItem.StartTime.ToString());

childNode.AppendChild(textNode);

recItemNode.AppendChild(childNode);

childNode = xmlDoc.CreateElement("Duration");

textNode = xmlDoc.CreateTextNode(recordedItem.Duration.ToString());

childNode.AppendChild(textNode);

recItemNode.AppendChild(childNode);

Here we have two very similar blocks of code, but they are not identical. This should not be seen as a barrier to reuse. Without too much effort this duplicated code can be extracted into a helper method. The end result is less complexity and much easier to see the intent.

AddTextNode(recItemNode,"StartTime", recordedItem.StartTime.ToString());

AddTextNode(recItemNode,"Duration", recordedItem.Duration.ToString());

Extract Reusable Functionality into Static Helper Methods

The AddTextNode method in the previous example actually could be used by any class that needed to add a child node to an XML node. So rather than staying buried inside the class that uses it, only for the wheel to be reinvented by the next coder who needs to do a similar task, move it out into a static method in a utility class. In this case, we could create an XmlUtils class. Obviously there is no guarantee that other developers will notice it and use it, but they stand a greater chance of finding it if it resides in a common utilities location rather than hidden away in the code behind of a Windows form.

Use Delegates To Enable Reuse Of Common Surrounding Code

There is one type of cut and paste code that can seem too hard to effectively refactor. Consider the following two functions. They are virtually identical, with the exception that the line of code inside the try block is different.

private void buttonSaveAsXml_Click(object sender, EventArgs args)

{

FolderBrowserDialog folderBrowserDialog = new FolderBrowserDialog();

folderBrowserDialog.Description = "Select folder to save search results to";

if (folderBrowserDialog.ShowDialog() == DialogResult.OK)

{

try

{

SaveAsXml(folderBrowserDialog.SelectedPath);

}

catch (Exception e)

{

log.Error(e, "Error exporting to XML");

MessageBox.Show(this, e.Message, this.Text, MessageBoxButtons.OK, MessageBoxIcon.Warning);

}

}

}

private void buttonSaveAsCsv_Click(object sender, EventArgs args)

{

FolderBrowserDialog folderBrowserDialog = new FolderBrowserDialog();

folderBrowserDialog.Description = "Select folder to save search results to";

if (folderBrowserDialog.ShowDialog() == DialogResult.OK)

{

try

{

SaveAsCsv(folderBrowserDialog.SelectedPath);

}

catch (Exception e)

{

log.Error(e, "Error exporting to CSV");

MessageBox.Show(this, e.Message, this.Text, MessageBoxButtons.OK, MessageBoxIcon.Warning);

}

}

}

The answer is to make use of delegates. We can take all the common code and put it into a SelectSaveFolder function, and pass in the action to do once the folder has been selected as a delegate. I've used the new C#3 lambda syntax, but ordinary or anonymous delegates work just as well:

delegate void PerformSave(string path);

void SelectSaveFolder(PerformSave performSaveFunction)

{

FolderBrowserDialog folderBrowserDialog = new FolderBrowserDialog();

folderBrowserDialog.Description = "Select folder to save search results to";

if (folderBrowserDialog.ShowDialog() == DialogResult.OK)

{

try

{

performSaveFunction(folderBrowser.SelectedPath);

}

catch (Exception e)

{

log.Error(e, "Error exporting");

MessageBox.Show(this, e.Message, this.Text, MessageBoxButtons.OK, MessageBoxIcon.Warning);

}

}

}

private void buttonSaveAsXml_Click(object sender, EventArgs args)

{

SelectSaveFolder(path => SaveAsXml(path));

}

private void buttonSaveAsCsv_Click(object sender, EventArgs args)

{

SelectSaveFolder(path => SaveAsCsv(path));

}

The benefit here is that the two button click handlers which previously had a lot of duplicated code now show very simply what they will do. And that is the point of refactoring your methods. Make them show the intent, not the implementation details.

Use IDE Refactoring Support

Finally, remember to use the Visual Studio Refactor | Extract Method command wherever possible. It can't always work out exactly what you would like it to do, but it can save you a lot of time and avoid a lot of silly mistakes if you make a habit of using it. (If you have a tool like Resharper then I'm sure that's even better).