Most developers who learn C# pick up the basic keywords quite quickly. Within a few weeks of working with a typical codebase you’ll have come across around a third of the C# keywords, and understand roughly what they do. You should have no trouble explaining what the following keywords mean:

public, private, protected, internal, class, namespace, interface, get, set, for, foreach .. in, while, do, if, else, switch, break, continue, new, null, var, void, int, bool, double, string, true, false, try, catch

However, while doing code reviews I have noticed that some developers get stuck with a limited vocabulary of keywords and never really get to grips with some of the less common ones, and so miss out on their benefits. So here’s a list, in no particular order, of some keywords that you should not just understand, but be using on a semi-regular basis in your own code.

is & as

Sometimes I come across a variation of the following code, where we want to cast a variable to a different type but would like to check first if that cast is valid:

    if (sender.GetType() == typeof(TextBox))
    {
        TextBox t = (TextBox)sender;
        ...
    }

While this works fine, the is keyword could be used to simplify the if clause (note that, unlike the exact type comparison above, is also matches classes derived from TextBox):

    if (sender is TextBox)
    {
        TextBox t = (TextBox)sender;
        ...
    }

We can improve things further by using the as keyword, which is like a cast, but doesn’t throw an exception if the conversion is not valid – it just returns null instead. This means we can write code in a way that doesn’t require the .NET framework to check the type of our sender variable twice:

    TextBox t = sender as TextBox;
    if (t != null)
    {
        ...
    }
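If you are on C# 7 or later, the type test and the conversion can be folded into a single expression with a pattern. Here is a minimal sketch; Control and TextBox are stand-in classes I have made up so that it compiles without a UI framework, and PatternDemo and Describe are equally hypothetical names:

```csharp
using System;

// Stand-in types (hypothetical) so the sketch compiles without a UI framework.
class Control { }
class TextBox : Control { public string Text = ""; }

static class PatternDemo
{
    // Tests the type and binds the converted value to t in one step (C# 7+).
    public static string Describe(object sender)
    {
        if (sender is TextBox t)
        {
            return "TextBox: " + t.Text;
        }
        return "not a TextBox";
    }

    static void Main()
    {
        Console.WriteLine(Describe(new TextBox { Text = "hello" }));
        Console.WriteLine(Describe(new Control()));
    }
}
```

This prints "TextBox: hello" followed by "not a TextBox". A null sender also falls through to the second branch, because is never matches null.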

I feel obliged to add that if your code contains a lot of casts, you are probably doing something wrong, but that is a discussion for another day.

using

Most developers are familiar with the using keyword for importing namespaces, but a surprising number do not make regular use of it for dealing with objects that implement IDisposable. For example, consider the following code:

    var writer = new StreamWriter("test.txt");
    writer.WriteLine("Hello World");
    writer.Dispose();

What we have here is a potential resource leak if there is an exception thrown between opening the file and closing it. The using keyword ensures that Dispose will always be called if the writer object was successfully created.

    using (var writer = new StreamWriter("test.txt"))
    {
        writer.WriteLine("Hello World");
    }

Make it a habit to check whether the classes you instantiate implement IDisposable, and if so, wrap them in a using statement.
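To convince yourself that Dispose really does run when the body throws, a small experiment helps. This is only a sketch: TrackedResource is a hypothetical class of mine that records whether it was disposed, standing in for something like a StreamWriter.

```csharp
using System;

// Hypothetical resource that simply records whether Dispose was called.
class TrackedResource : IDisposable
{
    public bool Disposed { get; private set; }

    public void Dispose()
    {
        Disposed = true;
    }
}

static class UsingDemo
{
    // Returns true if the resource was disposed even though the body threw.
    public static bool DisposedAfterException()
    {
        var resource = new TrackedResource();
        try
        {
            using (resource)
            {
                throw new InvalidOperationException("simulated failure");
            }
        }
        catch (InvalidOperationException)
        {
            // Swallowed only so the demo can inspect the resource afterwards.
        }
        return resource.Disposed;
    }

    static void Main()
    {
        Console.WriteLine(DisposedAfterException()); // prints "True"
    }
}
```

The using block behaves like a try/finally that calls Dispose in the finally part, which is why the flag is set before the exception reaches the catch.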

finally

Which brings us onto our next keyword, finally. Even developers who know and use using often miss appropriate scenarios for using a finally block. Here’s a classic example:

    public void Update()
    {
        if (this.updateInProgress)
        {
            log.WriteWarning("Already updating");
            return;
        }
        this.updateInProgress = true;
        ...
        DoUpdate();
        ...
        this.updateInProgress = false;
    }

The code is trying to protect us from some kind of re-entrant or multithreaded scenario where an Update can be called while one is still in progress (please ignore the potential race condition for the purposes of this example). But what happens if there is an exception thrown within DoUpdate? Now we are never able to call Update again because our updateInProgress flag never got unset. A finally block ensures we can’t get into this invalid state:

    public void Update()
    {
        if (this.updateInProgress)
        {
            log.WriteWarning("Already updating");
            return;
        }
        try
        {
            this.updateInProgress = true;
            ...
            DoUpdate();
            ...
        }
        finally
        {
            this.updateInProgress = false;
        }
    }
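Here is a cut-down, runnable version of the same idea that you can experiment with. The Updater class and its failUpdate parameter are hypothetical; the parameter just simulates DoUpdate throwing.

```csharp
using System;

class Updater
{
    private bool updateInProgress;

    public bool UpdateInProgress { get { return updateInProgress; } }

    // failUpdate is a hypothetical switch that simulates DoUpdate throwing.
    public void Update(bool failUpdate)
    {
        if (updateInProgress)
        {
            return;
        }
        try
        {
            updateInProgress = true;
            if (failUpdate)
            {
                throw new InvalidOperationException("simulated failure");
            }
        }
        finally
        {
            // Runs whether the try block completed or threw.
            updateInProgress = false;
        }
    }
}

static class FinallyDemo
{
    // Returns the flag's value after an update that failed with an exception.
    public static bool FlagAfterFailedUpdate()
    {
        var updater = new Updater();
        try
        {
            updater.Update(true);
        }
        catch (InvalidOperationException)
        {
            // Expected; the interesting part is the flag afterwards.
        }
        return updater.UpdateInProgress;
    }

    static void Main()
    {
        Console.WriteLine(FlagAfterFailedUpdate()); // prints "False"
    }
}
```

Note that the exception still propagates to the caller; finally only guarantees the flag is reset on the way out.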

readonly

OK, this one is fairly simple, and you could argue that code works just fine without it. The readonly keyword says that a field can only be assigned in its declaration or from within a constructor. It’s handy from a code readability point of view, since you can immediately see that this is a field whose value will never change during the lifetime of the object. It also becomes a more important keyword as you begin to appreciate the benefits of immutable classes. Consider the following class:

    public class Person
    {
        public string FirstName { get; private set; }
        public string Surname { get; private set; }

        public Person(string firstName, string surname)
        {
            this.FirstName = firstName;
            this.Surname = surname;
        }
    }

Person is certainly immutable from the outside – no one can change the FirstName or Surname properties. But nothing stops me from modifying those properties within the class. In other words, my code doesn’t advertise that I intend this to be an immutable class. Using the readonly keyword, we can express our intent better:

    public class Person
    {
        private readonly string firstName;
        private readonly string surname;

        public string FirstName { get { return firstName; } }
        public string Surname { get { return surname; } }

        public Person(string firstName, string surname)
        {
            this.firstName = firstName;
            this.surname = surname;
        }
    }

Yes, it’s a shame that this second version is a little more verbose than the first, but it makes it more explicit that we don’t want firstName or surname to be modified during the lifetime of the class. (Sadly C# doesn’t allow the readonly keyword on properties).
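If you can use C# 6 or later, getter-only auto-properties remove most of that verbosity. They can be assigned only in the constructor or in an initializer, and the compiler generates a readonly backing field for you. A minimal sketch (the ReadonlyDemo class and the sample names are just for illustration):

```csharp
using System;

public class Person
{
    // Getter-only auto-properties (C# 6+): assignable only in the
    // constructor or an initializer, backed by a compiler-generated readonly field.
    public string FirstName { get; }
    public string Surname { get; }

    public Person(string firstName, string surname)
    {
        FirstName = firstName;
        Surname = surname;
    }
}

static class ReadonlyDemo
{
    static void Main()
    {
        var person = new Person("Ada", "Lovelace");
        Console.WriteLine(person.FirstName + " " + person.Surname);
        // person.FirstName = "Grace"; // would not compile: the property has no setter
    }
}
```

This keeps the one-line property declarations of the first version while stating the immutability intent of the second.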

yield

This is a very powerful and yet rarely used keyword. Suppose we have a class that searches our hard disk for all MP3 files and returns their paths. Often we might see it written like this:

    public List<string> FindAllMp3s()
    {
        var mp3Paths = new List<string>();
        ...
        // fill the list
        return mp3Paths;
    }

Now we might use that method to help us search for a particular MP3 file we had lost:

    foreach (string mp3File in FindAllMp3s())
    {
        if (mp3File.Contains("elvis"))
        {
            Console.WriteLine("Found it at: {0}", mp3File);
            break;
        }
    }

Although this code seems to work just fine, its performance is sub-optimal, since we first find every MP3 file on the disk, and then search through that list. We could save ourselves a lot of time if we checked each file as we found it and aborted the search as soon as we had a match.

The yield keyword allows us to fix this without changing our calling code at all. We modify FindAllMp3s to return an IEnumerable<string> instead of a List. And now every time it finds a file, we return it using the yield keyword. So with some rather contrived example helper functions (.NET 4 has already added a method that does exactly this) our FindAllMp3s method looks like this:

    public IEnumerable<string> FindAllMp3s()
    {
        // No list is needed any more: each path is handed to the caller as it is found.
        foreach (var dir in GetDirs())
        {
            foreach (var file in GetFiles(dir))
            {
                if (file.EndsWith(".mp3"))
                {
                    yield return file;
                }
            }
        }
    }

This not only saves us time, but it saves memory too, since we now don’t need to store the entire collection of mp3 files in a List.
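For real code you would not need the contrived helpers at all. The built-in method alluded to above is presumably Directory.EnumerateFiles, which arrived in .NET 4 and also hands back paths lazily. A rough sketch, where the Mp3Search class and the root parameter are my own illustrative names:

```csharp
using System;
using System.Collections.Generic;
using System.IO;

static class Mp3Search
{
    // Lazily enumerates the paths of .mp3 files under root, including subdirectories.
    public static IEnumerable<string> FindAllMp3s(string root)
    {
        return Directory.EnumerateFiles(root, "*.mp3", SearchOption.AllDirectories);
    }

    static void Main(string[] args)
    {
        // Search the directory given on the command line, or the current one.
        string root = args.Length > 0 ? args[0] : Directory.GetCurrentDirectory();
        foreach (string path in FindAllMp3s(root))
        {
            Console.WriteLine(path);
        }
    }
}
```

Be aware that enumerating a whole disk this way can throw an UnauthorizedAccessException part-way through if it reaches a directory you are not allowed to read, so production code needs a plan for that.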

It can take a little while to get used to debugging this type of code since you jump in and out of a function that uses yield repeatedly as you walk through the sequence, but it has the power to greatly improve the design and performance of the code you write and is worth mastering.
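One way to see that jumping in and out without a debugger is to count how many items the iterator has actually produced. In this sketch the file names are made up, and YieldDemo, Produced, FindAll and FindFirst are hypothetical names:

```csharp
using System;
using System.Collections.Generic;

static class YieldDemo
{
    // Counts how many items the iterator has handed out so far.
    public static int Produced;

    public static IEnumerable<string> FindAll()
    {
        // Made-up file names standing in for a real disk search.
        string[] files = { "abba.mp3", "elvis.mp3", "queen.mp3", "zappa.mp3" };
        foreach (var file in files)
        {
            Produced++;
            yield return file; // execution pauses here until the caller asks for more
        }
    }

    // Returns the first item containing text, or null if there is none.
    public static string FindFirst(string text)
    {
        foreach (var file in FindAll())
        {
            if (file.Contains(text))
            {
                return file; // leaving the loop early stops the iterator too
            }
        }
        return null;
    }

    static void Main()
    {
        Produced = 0;
        Console.WriteLine(FindFirst("elvis")); // prints "elvis.mp3"
        Console.WriteLine(Produced);           // prints "2"
    }
}
```

Only two of the four items are ever produced, because the iterator body runs one step at a time, on demand from the foreach loop in the caller.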

select

OK, this one is cheating since this is a whole family of related keywords. I won’t attempt to explain LINQ here, but it is one of the best features of the C# language, and you owe it to yourself to learn it. It will revolutionise the way you write code. Download LINQPad and start working through the tutorials it provides.

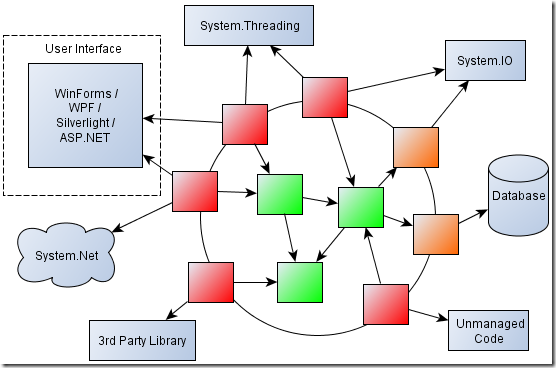

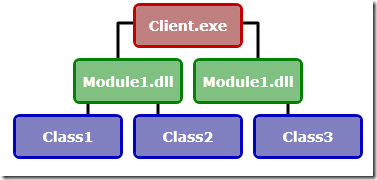
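Just to give a flavour of the syntax, here is a tiny query over some made-up file paths. It is a sketch and no substitute for a proper tutorial; LinqDemo and ElvisTracks are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

static class LinqDemo
{
    // Filters, sorts and transforms the paths with a single query expression.
    public static List<string> ElvisTracks(IEnumerable<string> paths)
    {
        var query = from path in paths
                    where path.Contains("elvis")
                    orderby path
                    select path.ToUpperInvariant();
        return query.ToList();
    }

    static void Main()
    {
        // Made-up paths for illustration.
        var paths = new[] { "queen/radio.mp3", "elvis/hound.mp3", "elvis/blue.mp3" };
        foreach (var track in ElvisTracks(paths))
        {
            Console.WriteLine(track);
        }
    }
}
```

This prints ELVIS/BLUE.MP3 and then ELVIS/HOUND.MP3. Like the yield example, the query itself is lazy; nothing is evaluated until ToList walks the results.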

interface

So you already know about this keyword. But you probably aren’t using it nearly enough. The more you write code that is testable and adheres to the Dependency Inversion Principle, the more you will need it. In fact at some point you will grow to hate how much you are using it and wish you were using a dynamic language instead. (dynamic is itself another very interesting new C# keyword, but I feel that the C# community is only just beginning to discover how we can best put it to use).
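As a rough illustration of why interfaces and testability go together, here is a sketch in which a class depends on an abstraction rather than a concrete logger. All of the names (ILog, ConsoleLog, FakeLog, UpdateService) are hypothetical and not taken from any particular library:

```csharp
using System;
using System.Collections.Generic;

// The abstraction that the higher-level code depends on.
public interface ILog
{
    void WriteWarning(string message);
}

// Production implementation: writes to the console.
public class ConsoleLog : ILog
{
    public void WriteWarning(string message)
    {
        Console.WriteLine("WARNING: " + message);
    }
}

// Test double: remembers warnings so that a test can inspect them.
public class FakeLog : ILog
{
    public readonly List<string> Warnings = new List<string>();

    public void WriteWarning(string message)
    {
        Warnings.Add(message);
    }
}

public class UpdateService
{
    private readonly ILog log;
    private bool updateInProgress;

    // The logger is passed in, so callers decide which implementation to use.
    public UpdateService(ILog log)
    {
        this.log = log;
    }

    public void BeginUpdate()
    {
        if (updateInProgress)
        {
            log.WriteWarning("Already updating");
            return;
        }
        updateInProgress = true;
    }
}

static class InterfaceDemo
{
    static void Main()
    {
        var service = new UpdateService(new ConsoleLog());
        service.BeginUpdate();
        service.BeginUpdate(); // prints "WARNING: Already updating"
    }
}
```

In a unit test you would hand UpdateService a FakeLog instead and assert on its Warnings list, with no console or log file involved.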

throw

You do know you are allowed to throw as well as catch exceptions, right? Some developers seem to think that a function should never let any exceptions get away, and so wrap everything in a generic catch block that writes an error to the log and returns, giving the caller no indication that things went wrong.

This is almost always wrong. Most of the time your methods should simply allow exceptions to propagate up to the caller. If you are using the using keyword correctly, you are probably already doing all the cleanup you need to.

But you can and should sometimes throw exceptions. An exception with a good error message, thrown at the point you realise something is wrong, can save hours of debugging time.
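For example, a method can validate its inputs up front and fail with a message that says exactly what was wrong. This is only a sketch; ThrowDemo and Withdraw are hypothetical names chosen for illustration:

```csharp
using System;

static class ThrowDemo
{
    // Returns the new balance, or throws as soon as a problem is detected.
    public static decimal Withdraw(decimal balance, decimal amount)
    {
        if (amount <= 0)
        {
            throw new ArgumentOutOfRangeException(
                "amount", amount, "Withdrawal amount must be greater than zero.");
        }
        if (amount > balance)
        {
            throw new InvalidOperationException(
                "Cannot withdraw " + amount + " from a balance of " + balance + ".");
        }
        return balance - amount;
    }

    static void Main()
    {
        Console.WriteLine(Withdraw(10m, 4m));
        try
        {
            Withdraw(10m, 25m);
        }
        catch (InvalidOperationException ex)
        {
            // The message already says what went wrong and with which values.
            Console.WriteLine(ex.Message);
        }
    }
}
```

Compare that with silently returning the old balance, or letting a negative balance surface as a mystery bug three screens later.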

Oh, and if you really do need to catch an exception and re-throw it, make sure you do it the correct way: with a bare throw; rather than throw ex;, so that the original stack trace is preserved.
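The difference between the two ways of re-throwing is easiest to see by checking whether the method that originally failed still shows up in the stack trace. A small sketch, with hypothetical names throughout (RethrowDemo, FailDeepInside and so on):

```csharp
using System;
using System.Runtime.CompilerServices;

static class RethrowDemo
{
    // NoInlining keeps this frame visible in stack traces for the demo.
    [MethodImpl(MethodImplOptions.NoInlining)]
    static void FailDeepInside()
    {
        throw new InvalidOperationException("original failure");
    }

    // A bare "throw;" re-throws the caught exception and keeps its stack trace.
    public static void RethrowKeepingTrace()
    {
        try
        {
            FailDeepInside();
        }
        catch (InvalidOperationException)
        {
            // (log or clean up here)
            throw;
        }
    }

    // "throw ex;" also re-throws, but restarts the stack trace at this line.
    public static void RethrowLosingTrace()
    {
        try
        {
            FailDeepInside();
        }
        catch (InvalidOperationException ex)
        {
            throw ex;
        }
    }

    // Reports whether the originally failing method still appears in the trace.
    public static bool TraceMentionsOrigin(Action action)
    {
        try
        {
            action();
        }
        catch (InvalidOperationException ex)
        {
            return ex.StackTrace.Contains("FailDeepInside");
        }
        return false;
    }

    static void Main()
    {
        Console.WriteLine(TraceMentionsOrigin(RethrowKeepingTrace));
        Console.WriteLine(TraceMentionsOrigin(RethrowLosingTrace));
    }
}
```

I would expect this to print True and then False: with throw ex; the trace no longer tells you where the problem actually started, which is exactly the information you want when debugging.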

goto

Only joking, pretend you didn’t see this one. Just because a keyword is in the language doesn’t mean it is a good idea to use it. out and ref usually fall into this category too – there are usually better ways to write your code.